The rise of generative AI tools may have leveled the on-page SEO playing field. But search engines are smart.

Recent updates to their algorithms and ranking systems mean that user experience (UX) and technical SEO now play a more pivotal role in B2B website search rankings than before.

Technical SEO presents your website's content and design elements in a way that search engines can understand. Without it, your content won't rank, no matter how much value readers can get from it.

In this article, you'll learn the fundamental technical SEO best practices to build a strong online brand, outperform your competition, and drive qualified traffic to your website.

The Role of AI in SEO

Search engines share one goal: To provide users with the most relevant and helpful content on their search result pages (SERPs) to solve users' problems.

There are over 6 billion webpages (and counting) on the Web; search engines determine which sites to display through two distinct processes:

- The first is crawling. As the name suggests, this process is when search bots crawl the Web to find newly published or updated content.

- Once a page is found, bots start the next process, called indexing. This process is more complex because it's where search engines analyze each page's content and store it in their index, so they can later rank it according to ranking factors, signals, and relevance to a search query.

Generative AI tools, such as ChatGPT, have made content creation less daunting. However, those tools don't always generate content structured for search engine bots to crawl and index easily.

That's why technical SEO is crucial in a successful B2B SEO strategy. It ensures search bots can easily access and understand your webpages, index them, and, eventually, display them in relevant search results.

Initial Website Audit

Every successful SEO strategy starts with conducting a comprehensive website audit because it helps identify crawling and indexing issues and what's causing them.

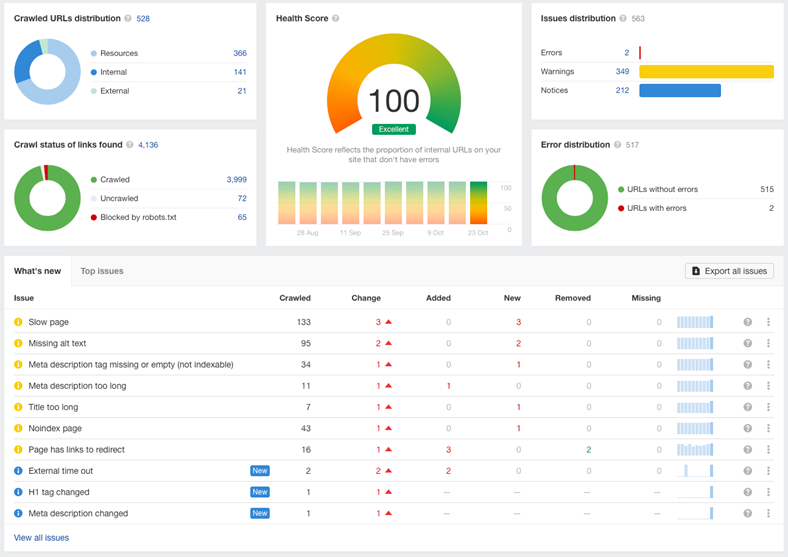

Ahrefs and other, similar tools can help you speed up the process by scanning your website for these issues and then compiling them in a comprehensive report, like this:

Sample of a website audit report generated by Ahrefs

For example, the report above shows that several webpages are missing essential meta tags. You can then click on each of those missing meta tags to get a list of the affected webpage URLs so you can resolve those issues.

Optimizing Website Structure

Search engines put a premium on your website's UX for two reasons.

First, a positive website UX makes it easier for website visitors to find what they're looking for, significantly increasing metrics, such as average time on page and clickthrough rate (the percentage of website visitors that clicked a link on your webpage).

It also directly affects search bots' ability to crawl and index webpages.

In the fifth episode of the Search Off the Record podcast, Google Developer Advocate Martin Splitt explains that search bots have a crawl budget: how many webpages they can crawl within a specific timeframe.

A complicated and unorganized website structure can prevent search bots from completing the crawl in the allotted time. As a result, some of your website's pages won't be crawled, indexed, and included in SERPs for relevant queries.

To keep your site quick and easy to crawl, organize your webpages so it takes your users no more than three clicks to find what they're looking for.

Doing so prevents users from getting frustrated as they navigate through your website. It'll also minimize the chances that search bots will exceed their crawl budget, ensuring every page on your site is crawled and indexed.
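One way to check the three-click guideline is to treat your internal links as a graph and measure how many clicks each page sits from the homepage. The sketch below is only an illustration: the `SITE` dictionary and its URLs are made up, and in practice you would build that map from a crawl export of your own site.

```python
from collections import deque


def click_depths(links, home):
    """Return the fewest clicks needed to reach each page, starting from `home`."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in a BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_beyond(links, home, max_clicks=3):
    """Return (pages deeper than max_clicks, pages not reachable from home)."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(p for p, d in depths.items() if d > max_clicks)
    unreachable = sorted(all_pages - set(depths))
    return too_deep, unreachable


# Hypothetical internal-link map: page -> pages it links to.
SITE = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/services/seo": ["/services/seo/audit"],
    "/services/seo/audit": ["/services/seo/audit/checklist"],
    "/blog": [],
    "/old-landing-page": ["/"],
}

too_deep, unreachable = pages_beyond(SITE, "/")
print("More than three clicks away:", too_deep)
print("Not linked from anywhere reachable:", unreachable)
```

Pages in the first list are candidates for a link from a higher-level page; pages in the second list have no internal path from the homepage at all.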

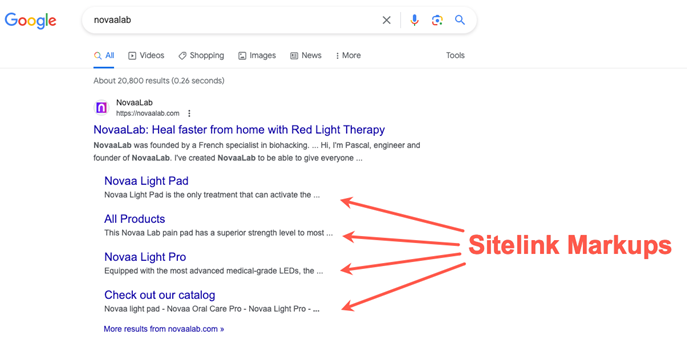

Another way to optimize your site's structure is to implement schema markup: structured code that describes the elements of your website in a way that search engines can easily understand. It also lets search results give users more in-depth information about your website.
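To give a rough idea of what schema markup looks like, here is a minimal sketch that builds a JSON-LD snippet using the schema.org `Organization` type. The company name, URLs, and the `organization_jsonld` helper are placeholders invented for this illustration; the right type and properties depend on what your pages actually contain.

```python
import json


def organization_jsonld(name, url, logo_url, profiles):
    """Build a JSON-LD <script> snippet describing an organization."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo_url,
        "sameAs": list(profiles),  # official profiles on other sites
    }
    # Escape "</" so a stray "</script>" inside a value cannot close the tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Placeholder values for illustration only.
print(
    organization_jsonld(
        name="Example Co",
        url="https://www.example.com",
        logo_url="https://www.example.com/logo.png",
        profiles=["https://www.linkedin.com/company/example-co"],
    )
)
```

The printed snippet would go in the page's HTML, where search engines can read it alongside the visible content.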

For example, sitelink schema markups allow a client of ours, Novaalab, to include links to its product pages, making them easier to find and visit:

Enhancing Website Speed and Mobile Optimization

More than half (55%) of search traffic comes from mobile devices, so optimizing your website with a mobile-first approach is imperative.

Pageload time is one thing that can make or break a website's UX, especially when it's viewed on a mobile device.

Fully 64% of mobile users expect a website to load in under four seconds. Any longer than that, and they leave.

Various factors can cause slow pageload times.

- One is having too many interactive elements on your website running JavaScript. By default, when a Web browser encounters a JavaScript file while reading a page's HTML, it pauses, then downloads and runs that file before continuing. Doing so ensures that the page will function as intended when it's displayed. Such scripts are called render-blocking because the browser won't display the rest of the page until it has loaded them, resulting in a slow pageload time.

- Another is that your website isn't connected to a content delivery network (CDN), a geographically distributed network of servers that store copies of your website's files. When someone visits your website, the browser downloads those files from the server nearest to the visitor's location, decreasing load time.
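For the JavaScript issue above, a quick first check is to look for scripts in a page's `<head>` that load without the `async` or `defer` attributes, since those are the ones a browser waits for by default. The sketch below uses only Python's standard library; the sample page and its file names are invented, and a tool such as PageSpeed Insights will give a far more complete picture.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collect external scripts in <head> that have neither async nor defer."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head and "src" in attrs:
            is_module = attrs.get("type") == "module"  # module scripts are deferred by default
            if "async" not in attrs and "defer" not in attrs and not is_module:
                self.blocking.append(attrs["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_render_blocking_scripts(html):
    finder = BlockingScriptFinder()
    finder.feed(html)
    return finder.blocking


# Invented sample page for illustration.
SAMPLE_PAGE = """<!doctype html>
<html>
<head>
  <title>Example</title>
  <script src="/js/analytics.js"></script>
  <script src="/js/chat-widget.js" async></script>
  <script src="/js/menu.js" defer></script>
</head>
<body>
  <script src="/js/footer.js"></script>
</body>
</html>"""

print(find_render_blocking_scripts(SAMPLE_PAGE))
```

Scripts the check flags are worth raising with your developer, who can decide whether they can safely be deferred or loaded asynchronously.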

Google's PageSpeed Insights tool, along with others, can help identify issues affecting your site's loading time on desktop and mobile.

Then, to resolve those issues, you can share the report with your website developer or a B2B SEO service provider specializing in technical SEO.

Leveraging XML Sitemaps and Robots.txt

XML sitemaps and robots.txt files tell search bots which pages you want them to crawl and index and which pages you don't want them to access. They help conserve your website's crawl budget.

They also support lead generation initiatives by helping keep things like gated content from being indexed and displayed on SERPs.
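Before publishing a robots.txt file, it can help to confirm that it blocks and allows the pages you expect. The sketch below uses `urllib.robotparser` from Python's standard library; the rules and URLs are hypothetical examples, not recommendations for any particular site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents: keep bots out of gated downloads
# and a client portal, and point them to the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /downloads/gated/
Disallow: /client-portal/

Sitemap: https://www.example.com/sitemap.xml
"""


def crawlable(robots_txt, urls, user_agent="*"):
    """Map each URL to True if the rules let `user_agent` crawl it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


checks = crawlable(
    ROBOTS_TXT,
    [
        "https://www.example.com/blog/technical-seo",
        "https://www.example.com/downloads/gated/whitepaper.pdf",
        "https://www.example.com/client-portal/login",
    ],
)
for url, allowed in checks.items():
    print("crawl" if allowed else "skip ", url)
```

Keep in mind that robots.txt governs crawling; it's worth double-checking in Google Search Console that blocked pages really stay out of the index.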

It's therefore crucial to submit an updated XML sitemap to Google each time you perform a major update on your website, like adding a client portal to house all your intake forms and keep track of your services.

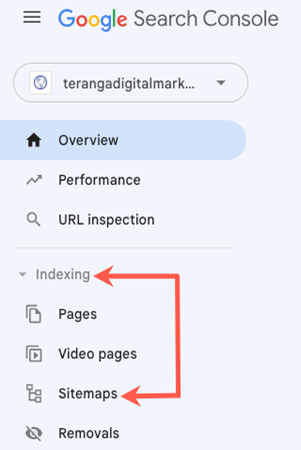

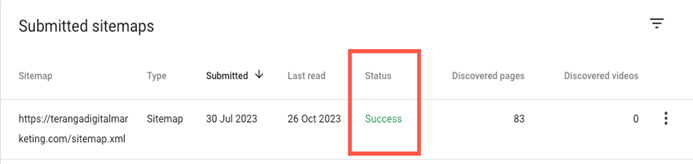

You can do so by clicking "Sitemaps" under "Indexing," located on the left sidebar of your website's Google Search Console account.

Paste your sitemap's URL under "Add a new sitemap" and click "Submit."

GSC will notify you once Google has finished processing your sitemap.
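Many content management systems and SEO plugins generate the sitemap file for you. If yours doesn't, the sketch below shows roughly what one contains, built with Python's standard library and following the sitemaps.org format. The URLs and dates are placeholders, and `build_sitemap` is a helper name made up for this example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod  # YYYY-MM-DD
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


# Placeholder pages for illustration.
PAGES = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/services", "2024-01-10"),
    ("https://www.example.com/client-portal", "2024-01-20"),
]

print(build_sitemap(PAGES))
```

Saving that output as `sitemap.xml` at the root of the site gives you the URL to paste into Google Search Console.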

Monitoring and Analyzing Website Performance

Applying technical SEO best practices isn't a one-time event. It's an ongoing process that involves regularly checking your technical SEO metrics so you can quickly identify and resolve issues affecting your site's search visibility and rankings.

At the very least, you should conduct a site health check once a month to check for any broken links, pages that weren't indexed, and pages that search bots can't access, just to name a few. That way, you can make the necessary changes to resolve issues and improve your website's performance on SERPs.
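Part of that monthly check can be automated. As a minimal sketch, the script below requests each URL in a list and reports the ones that return an error status or fail to respond. The function names are invented for this example, the URL list would come from your own sitemap or crawl export, and a dedicated audit tool will cover much more than this.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the HTTP status code for `url`, or None if the request fails outright."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # the server answered, but with an error such as 404
    except OSError:
        return None  # DNS failure, refused connection, timeout, and so on


def find_broken_links(urls, get_status=http_status):
    """Map each failing URL to its status code (None if there was no response)."""
    broken = {}
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken[url] = status
    return broken


# Example usage (makes real network requests, so it is left commented out):
# print(find_broken_links(["https://www.example.com/", "https://www.example.com/old-page"]))
```

The `get_status` parameter exists so the check can be tried against a saved list of results without touching the network. Some servers reject `HEAD` requests, in which case a `GET` request may be needed instead.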

Also make it a habit to stay informed about the latest SEO industry trends and algorithm updates from Google and SEO industry experts. Doing so will help you adjust your SEO strategy and stay ahead of your competitors.

* * *

Even though you might have a unique, user-friendly, and responsive website packed with valuable content, it won't do much to help you achieve your business goals if search engines can't find it and share it with users.

Implementing the suggestions in this article will ensure that search bots can properly crawl and index the critical pages of your website, improving the chances that your target audience finds your website and visits it.
