Google Search Console (previously Google Webmaster Tools) is a free service by Google that allows you to check your website's indexing status and to optimize its visibility for searchers.

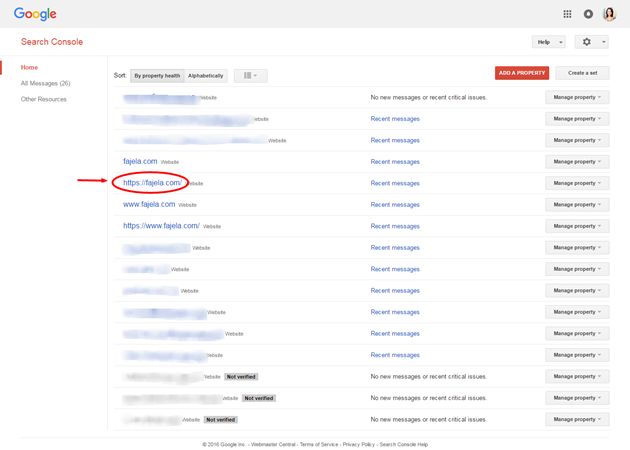

To adjust your website in Google Search Console, you need to go to the dashboard and select your site's main property. If your main version uses www, select that one. If you have an SSL certificate, you'll have to select the property with "https" (with or without www, depending on your main mirror).

Let's start with Search Appearance. We'll go from top to bottom.

Search Appearance

Structured Data

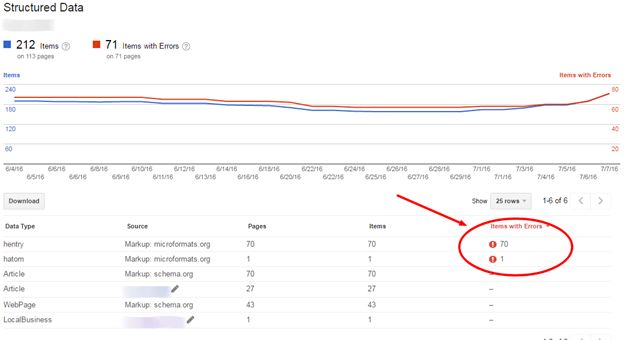

This is where you can see whether Google finds any structured data on your website (including manual highlights from Data Highlighter, which we'll be looking at more closely later on). If there are any errors, there will be a notification that looks like this:

Microformats with errors don't work, so it's better either to remove them or to fix the issue. If schema.org is already implemented correctly for your site, you can just remove those formats with errors. Good markup will help search engines to better understand the contents of your webpages. There's no direct evidence that it improves your rankings, but rich snippets designed wisely (not for the sake of appearance) influence the user experience (UX); user behavior, in turn, implicitly affects rankings.

Rich Cards

These are, again, part of structured data. You should definitely use rich cards if your website provides one of these types of information:

- Recipes

- Events

- Products

- Reviews

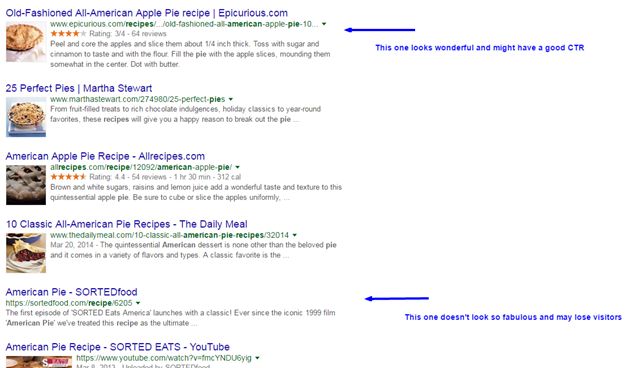

Your snippet in search will look fantastic, for example in the case of recipe pages, where images are allowed.

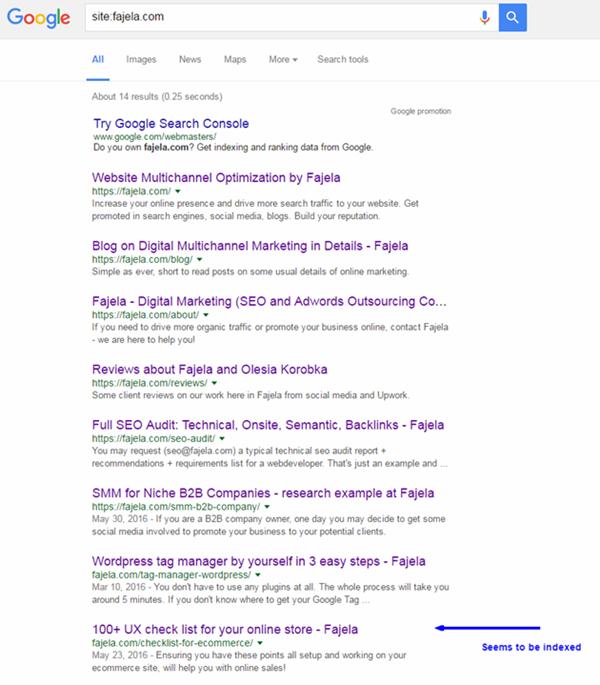

Data Highlighter

Sometimes, you have certain limitations and cannot modify your website to input microdata. You should use Data Highlighter then. It's very easy to use and Google has detailed instructions for it. It lets you tag one page and have the same tags applied to a group of similar pages. I do recommend using it, even if you have structured data on your website, especially in the case of new sites. Sometimes it helps to find out whether some pages that you thought had been indexed are in fact not indexed.

Example:

The same page when you check it for being indexed:

HTML Improvements

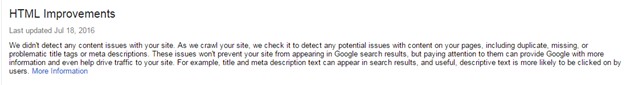

Here's what you should see ideally in this section:

However, if you have some on-page SEO issues, you will see those. They usually include...

- Title tags

- Meta description

- Non-indexable content

The problems with those may be as follows:

- Missing tags

- Duplicates

- Long tags

- Short tags

- Non-informative

I recommend you deal with those even for the pages that are not promoted.
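If you want to catch some of these issues before Google reports them, a small script can check a page's title and meta description. The sketch below uses only Python's standard library; the length limits are assumptions chosen for illustration, not thresholds published by Google, and the names `HeadTagParser` and `audit_head` are hypothetical.

```python
from html.parser import HTMLParser


class HeadTagParser(HTMLParser):
    """Collects the <title> text and the meta description of one page."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = None
        self.description = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
            self.title = ""
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def audit_head(html, title_range=(10, 60), description_range=(50, 160)):
    """Return a list of issues; the ranges are assumed limits, in characters."""
    parser = HeadTagParser()
    parser.feed(html)
    parser.close()
    issues = []
    for label, value, (low, high) in (
        ("title", parser.title, title_range),
        ("meta description", parser.description, description_range),
    ):
        text = (value or "").strip()
        if not text:
            issues.append(f"missing {label}")
        elif len(text) < low:
            issues.append(f"short {label}")
        elif len(text) > high:
            issues.append(f"long {label}")
    return issues
```

Duplicates need a comparison across pages, so they are left out of this sketch; collecting each page's title in a dictionary and looking for repeated values would cover that case.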

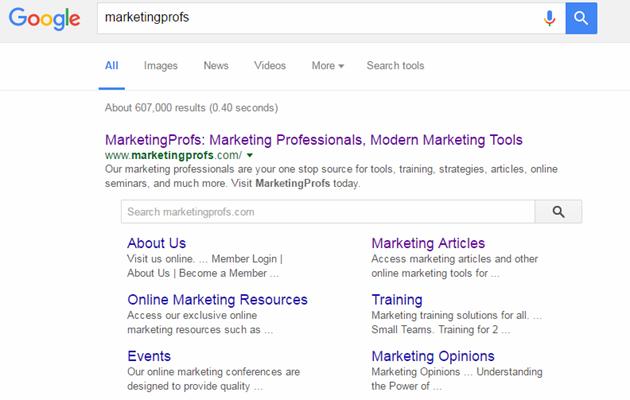

Sitelinks

This section is usually used in two cases.

The first is when you want to demote the link from search. For example, if you have two pages ranked for the same keyword and the one you are promoting is ranked lower in search, then you may want to demote the other one.

Otherwise, you may want to polish your branding search results.

If you want another page to be shown under your main URL, you should demote the one you don't want to be there. A very simple and yet effective tip.

There are also Accelerated Mobile Pages in the Search Appearance section. We won't look at those in detail here, because that can be another very long article.

Search Traffic

Search Analytics

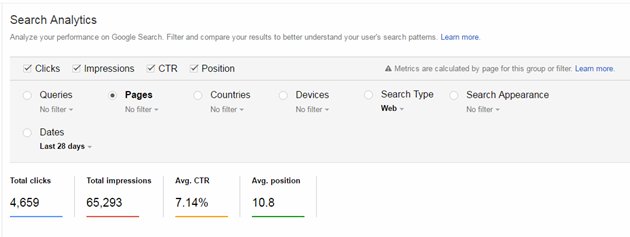

This is much more convenient to look at in your Google Analytics account; however, there's a lot of marketing data here as well. And it's another, indirect method to see whether your page is indexed; if there are no visitors from search, and it's not even mentioned there, then it's highly probable that it's not indexed.

You may also analyze some actual queries, pages, countries, etc. (see image, below). It is also very helpful for analyzing how many image or video clicks you get from search (filter > Search Type > Image/Video). And here you can estimate the clicks on your pages in search that were shown with optimized snippets. In this case, it seems worth it:

Links to Your Site

You can track how many new links to your website appear. This information may be important when you have to deal with spam link attacks from your competitors; if that's the case, you'd have to check it daily, and swiftly add the new spam links to your disavow file.

In other cases, it's much more convenient to track it from your Analytics account; there you'd be able to see the exact pages visitors are coming to your site from (Referral Traffic).

Internal Links

If your promoted page is important, it should have a relevant number of internal links leading to it. Again, if you have one page getting ranked for a certain keyword instead of another you are promoting, just check how many internal links lead to both of them (and also the anchors of those links). The promoted page's internal links should outnumber others' and should also have relevant anchor text.
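To compare pages outside Search Console, you could count internal links yourself. The following is a minimal sketch that takes already-downloaded HTML (a dictionary of page URL to markup) and groups anchor texts by the internal page they point to. `LinkCollector` and `internal_link_anchors` are hypothetical names, and "internal" here simply means the same host as the linking page.

```python
from collections import defaultdict
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects (absolute URL, anchor text) pairs from <a href> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def internal_link_anchors(pages):
    """pages: {page URL: HTML}. Returns {target URL: [anchor texts]}."""
    anchors = defaultdict(list)
    for page_url, html in pages.items():
        site = urlparse(page_url).netloc
        collector = LinkCollector(page_url)
        collector.feed(html)
        collector.close()
        for target, text in collector.links:
            # Keep same-host links only, and skip links from a page to itself.
            if urlparse(target).netloc == site and target != page_url:
                anchors[target].append(text)
    return dict(anchors)
```

Comparing `len(result[url])` for the promoted page and its rival, along with the anchor texts themselves, gives roughly the same picture as the Internal Links report.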

Manual Actions

You'd usually receive a notice if there are any manual actions to your site (usually because of webspam). However, if you suddenly face a dramatic search-traffic decrease, come to this section to check whether there are any problems.

International Targeting

You can check your webpages' language settings here (if hreflang is configured properly), and specify your country targeting in cases when your domain lets you do so. If you have a regional domain, you won't be able to; the country will be detected automatically.
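For reference, hreflang is usually declared with `<link rel="alternate">` tags in the page head. The sketch below generates those tags from a mapping of language codes to URLs; the function name `hreflang_links` and the example URLs are assumptions made for illustration.

```python
from html import escape


def hreflang_links(alternates, default_url=None):
    """alternates: {language code: URL}. Returns <link> tags, one per line."""
    lines = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in alternates.items()
    ]
    if default_url:
        # x-default marks the fallback page for users matching no listed language.
        lines.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
        )
    return "\n".join(lines)


if __name__ == "__main__":
    print(hreflang_links(
        {"en": "https://example.com/en/", "de": "https://example.com/de/"},
        default_url="https://example.com/",
    ))
```

Every language version should carry the full set of tags, including one pointing to itself; otherwise this report tends to flag missing return links.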

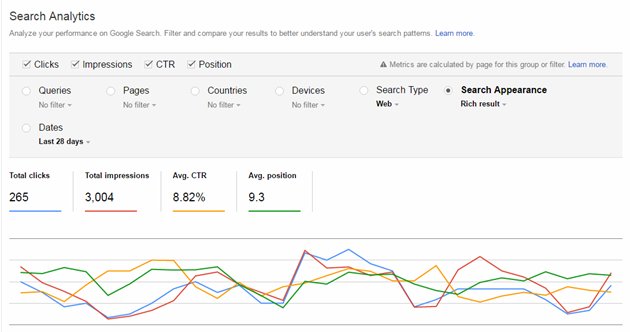

Mobile Usability

The information here is the same as in Google PageSpeed Insights. However, here you may see how many pages (and which ones) actually have the problems listed. Those are also available to download, so that you can send the file to your webmaster to fix the issue.

Google Index

Index Status

You can see how many pages are indexed by Google, but you cannot see which ones. The number is often less than the one you get with the "site:" operator in Google. It's useful to compare the numbers to try to figure out which pages are not in the index and why.

Content Keywords

These influence your website's topic relevance. If you want to get ranked, say, for marketing, but have nothing associated with that in your top 20 keywords, you'll have a hard time trying to move your site to the top. It's worth keeping in mind, however, that keyword stuffing may lead to your website being penalized. Thus, some balance has to be kept. Don't start to stuff your page with commercial keywords. Try adding them bit by bit, and measure the results.

Blocked Resources

Your ranking in Google may fall if your robots.txt blocks some important JS, CSS, or image files on your website. That is easy to deal with; if you find any problems here, Google help will guide you on how to unblock those.
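You can also test a robots.txt file locally before uploading it. Here is a minimal sketch using Python's standard `urllib.robotparser`; the helper name `blocked_resources` and the sample rules are hypothetical. One caveat: this parser does simple prefix matching and does not interpret wildcards the way Google does, so treat it as a rough check only.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt, resource_urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /assets/\n"
    urls = [
        "https://example.com/assets/app.js",  # placeholder URLs
        "https://example.com/css/site.css",
    ]
    for url in blocked_resources(rules, urls):
        print("blocked:", url)
```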

Remove URLs

Sometimes, after hiring a webmaster (and quarreling with them until the sparks fly), you may find that your website is no longer visible in search. If it's a WordPress site, you'd go and check whether it's blocked in wp-admin. You'd also check the robots.txt and everywhere else. If no other issues are found, come and see this section; there may be a removal request here. If that's the issue, remove the request, and wait till your website is indexed again.

The same goes for your other pages that are not indexed: Check them here.

There may also be a case when you don't want one of your pages to be visible through search. You've already excluded it from the sitemap and added it to robots.txt, but it's still there. You should then add it in this section.

Crawl

Crawl Errors

Some crawl errors are critical, others are not. From time to time, there may appear very strange URLs that you don't really have on your website. That can be some old data (deleted content still stored in the cache) and Google may keep it for months until it finally removes it.

But errors can reflect real problems on your website. You shouldn't have real pages with a 403 or 404 response. If there are any, just fix the issue swiftly. Sometimes, fixing them also helps improve your indexing.

Finally, if you suddenly start seeing lots of 404 errors for pages that don't really exist, and there's no problem with your website, that may be, again, your competitors buying spam links to your website. If that's the case, delete those by marking errors as fixed and adding the resources that provide those links to your disavow file.

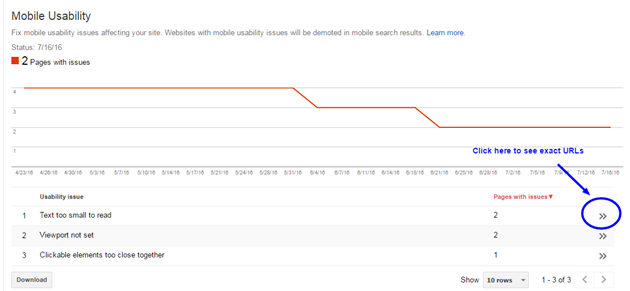
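If you end up maintaining a disavow file, a small helper can build it from a list of spam link URLs. This sketch assumes you want to disavow whole domains and uses the `domain:` line format with `#` comments that Google's disavow tool accepts; the function name `build_disavow` and the sample domains are hypothetical.

```python
from urllib.parse import urlparse


def build_disavow(spam_urls, note=""):
    """Return disavow file text with one 'domain:' line per linking host."""
    hosts = sorted(
        {urlparse(url).netloc.lower() for url in spam_urls if urlparse(url).netloc}
    )
    lines = [f"# {note}"] if note else []
    lines += [f"domain:{host}" for host in hosts]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_disavow(
        ["http://spam.example/a", "http://spam.example/b", "https://bad.example/x"],
        note="spam links found in Crawl Errors",
    ))
```

Hosts are taken as-is, so `www.spam.example` and `spam.example` would be listed separately; review the list by hand before uploading it, since disavowing a legitimate domain can hurt you.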

Crawl Stats

See how often Googlebot visits your site. It's also useful to look at the "time spent downloading the page" report, both the highest and the average times. Try not to have more than three seconds; the less, the better.

Fetch as Google

Here you may see how Google renders your pages. Ideally, that should not be different from how your visitors actually see the pages.

If you have a page that is not indexed or is new and you want Google to get to know about it ASAP, submit it here. Unfortunately, you cannot force Google to index it instantly, but you should try.

Robots.txt Tester

You should see 0 Errors and 0 Warnings, and your actual robots.txt contents, of course. If you have a new website, submit your robots file here and test it.

Sitemaps

Submit your sitemap here. It may take several hours before it gets processed, however. There have to be no errors and no warnings. If there are any, fix them ASAP.

You should also test your sitemap the first time you submit it. If you had some indexing problems and then fixed the issues, you may also resubmit your sitemap.
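If your CMS doesn't generate a sitemap for you, a basic one is easy to produce. The sketch below builds a minimal XML sitemap with Python's standard library, using the sitemaps.org namespace; `build_sitemap` is a hypothetical helper, and optional fields such as `lastmod` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap as UTF-8 bytes, one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as '&' when serializing.
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    xml_bytes = build_sitemap(["https://example.com/", "https://example.com/about"])
    with open("sitemap.xml", "wb") as handle:
        handle.write(xml_bytes)
```

Parsing the file back with `ET.fromstring` is a quick sanity check that the XML is well-formed before you submit it.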

Security Issues

These are very important. A site with security issues may be kicked out of search in seconds, and it takes a crazy amount of effort and time to restore its ranking positions. It's better to prevent such issues.

Other Resources

There's a Help Center along with lots of resources provided by Google to help you manage your Search Console properly; there's also a Google+ group and forum where you can ask questions if you still don't understand something.