If Facebook were a country, it would be one of the biggest in the world... which is why it's so important for marketers to understand how to advertise effectively on Facebook.

A successful Facebook ad can make a huge impact on a potentially huge audience.

Part of the Plan

I can't overemphasize how important testing is to creating successful ad campaigns.

When you start to brainstorm your ad, look for ways to bake in testing from the start: come up with multiple headlines, get a few different images to try, and plan different versions of the ad, each testing one distinct variable.

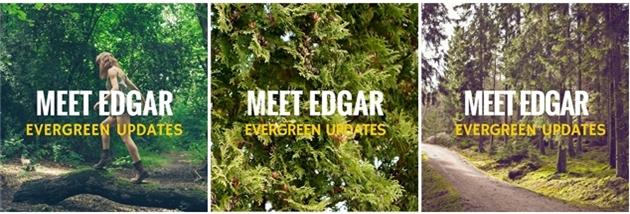

An example of testing one variable at a time: same headline, different image. And wouldn't you know it, the one with the hiker performed almost twice as well as the others.

Have Your Goals in Mind

What are you trying to do, and how will you measure success? There are a lot of ways you can evaluate the effectiveness of your ads, but the metric we rely on most at Edgar is cost per action. Simply put, this is how much, on average, you have to spend to get the action you're looking for.

For example, if you're advertising on Facebook to get more likes for your page, how much are you spending for each like?

- Amount spent: $45

- Number of likes gained: 90

- Cost per like: $0.50 (Amount/Likes)
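The same arithmetic fits in a tiny Python helper. This is only a sketch: it divides the amount spent by the number of actions, and the function name `cost_per_action` is our own label, not something from Facebook's tools.

```python
def cost_per_action(amount_spent: float, actions: int) -> float:
    """Average spend per result: amount spent divided by number of actions."""
    if actions <= 0:
        raise ValueError("need at least one action to compute cost per action")
    return amount_spent / actions


# The numbers from the example above: $45 spent, 90 likes gained.
cpl = cost_per_action(45.00, 90)
print(f"Cost per like: ${cpl:.2f}")  # prints "Cost per like: $0.50"
```

Swap "likes" for clicks, sign-ups, or purchases and the calculation stays the same.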

Breaking down the numbers so you can compare the cost of each individual result makes it easier to judge the effectiveness of your ads. The lower your cost per action, the more effective your ad.

Get Scientific

In science, one of the rules of a good experiment is that you test only one variable at a time. The same rule applies to advertising on Facebook (or anywhere else online). If you change only one thing at a time, you'll have a better chance of finding the things that really make an impact.

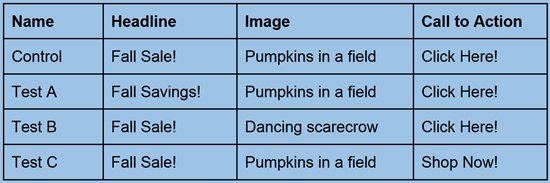

Say you have an ad with one headline, one image, and one call to action. You could test changes to any one of those elements (try three different headlines, for example, but keep everything else the same) or you could run a more complex test, trying different versions of each variable:

- Control: default version of the ad

- Test A: same as Control, but with a different headline

- Test B: same as Control, but with a different image

- Test C: same as Control, but with a different call to action

With some (admittedly terrible) copy in place, that might look like this:

Creating such a rubric will let you study exactly what works and what doesn't, which is how you'll be able to create better and more effective ads.
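A rubric can be as simple as a table of variants. The Python sketch below records which single element each version changes and ranks the versions by cost per action. The `Variant` class is hypothetical, and every spend and action figure in it is a made-up placeholder, not real campaign data.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    changed_element: str  # the one variable that differs from the Control
    amount_spent: float
    actions: int

    @property
    def cost_per_action(self) -> float:
        # No actions yet means no meaningful cost; rank it last.
        return self.amount_spent / self.actions if self.actions else float("inf")


# Hypothetical placeholder figures, for illustration only.
rubric = [
    Variant("Control", "none", 50.00, 100),
    Variant("Test A", "headline", 50.00, 125),
    Variant("Test B", "image", 50.00, 80),
    Variant("Test C", "call to action", 50.00, 95),
]

# Print the rubric from cheapest to most expensive result.
for v in sorted(rubric, key=lambda v: v.cost_per_action):
    print(f"{v.name:<8} changed: {v.changed_element:<15} CPA: ${v.cost_per_action:.2f}")

best = min(rubric, key=lambda v: v.cost_per_action)
print("Lowest cost per action:", best.name)
```

Because each test version changes exactly one element, the `changed_element` column tells you which change is responsible for any difference from the Control.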

Keep an open mind and trust the results; sometimes, you'll get great results where you least expect them, whereas the "sure-fire ideas" simply fall flat.

Sample Size: the More, the Merrier

Creating a split test like the one above is efficient and effective... so long as it reaches enough people to get viable results.

Determining just how large a sample you need can take some pretty serious math, so we'll just say that, in general, bigger is better. You may need only a few hundred responses to see a definite trend, but sometimes it takes far more than that.
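If you want a rough number anyway, one common approach (our assumption here, not a method this article prescribes) treats each person reached as either taking the action or not, and applies the standard normal-approximation sample-size formula for comparing two proportions. The function name, the 5% significance level, the 80% power, and the example action rates below are all assumed for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_version(p_control: float, p_variant: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """People each version must reach to tell p_control from p_variant
    (two-sided test of two proportions, normal approximation)."""
    if p_control == p_variant:
        raise ValueError("rates must differ to size a test")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    n = (z_alpha + z_beta) ** 2 * variance / (p_control - p_variant) ** 2
    return math.ceil(n)  # round up: you can't reach a fraction of a person


# Hypothetical: a 2% baseline action rate, hoping to detect a lift to 3%.
print(sample_size_per_version(0.02, 0.03))
```

With these assumed inputs the sketch asks for roughly 3,800 people per version. Halving the difference you hope to detect roughly quadruples that figure, which is why smaller audiences are better served by a simple A/B split.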

If you're testing something that will be seen only by a relatively small number of people, consider testing only an A and a B version (again, with just one variable difference) rather than the more elaborate four-way split shown above.

You can always run consecutive rounds of A/B tests to test multiple changes without thinning out your sample size. For example, your first test would pit the control against Test A. Your next test would pit the winner of that against Test B, and so on. It's still effective testing; it just takes more time.
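The round-by-round approach is essentially a "champion vs. challenger" loop. In this Python sketch, `run_ab_test` is a hypothetical stand-in for actually running a two-way test and reading off the lower cost per action; here it just looks up made-up numbers.

```python
from typing import Callable


def sequential_ab_rounds(control: str, challengers: list[str],
                         run_ab_test: Callable[[str, str], str]) -> str:
    """Pit the current winner against each challenger in turn; return the final winner."""
    champion = control
    for challenger in challengers:
        # Each round is a plain two-way split, so the audience is only ever halved.
        champion = run_ab_test(champion, challenger)
    return champion


# Hypothetical cost-per-action results, for illustration only.
MADE_UP_CPA = {"Control": 0.50, "Test A": 0.40, "Test B": 0.45, "Test C": 0.38}


def run_ab_test(a: str, b: str) -> str:
    """Stand-in for a real A/B round: lower cost per action wins; ties keep the incumbent."""
    return b if MADE_UP_CPA[b] < MADE_UP_CPA[a] else a


print(sequential_ab_rounds("Control", ["Test A", "Test B", "Test C"], run_ab_test))  # Test C
```

Keeping the incumbent on a tie is a design choice: a new version has to actually beat the current winner before it takes over.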

Conversely, if you have a massive audience and want to test multiple versions of each component, go for it. As long as you test one variable at a time, have a solid rubric to track results, and have a sample size large enough to get meaningful data, you'll be just fine.

It Just Gets Better

You've conducted your test, you've come up with some winners, and now it's time to call it a day, right? Not at all. After your first round of testing, it's time to plan your next series of tests!

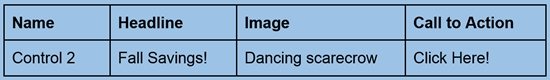

Using our test example above and testing for the lowest cost per action, let's pretend that we found the control CTA worked better, but that the tested headline and image were winners. Slap them all together and use that as the new control. It'd look something like this:

Come up with some new ideas to make this approach work even better, and launch the next round of your ad. And be sure to compare your results against the first round of testing; sometimes you'll find that combining all of the winning elements into a should-be-super ad just doesn't work the way you'd expect.

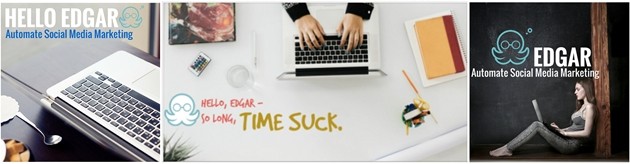

Advanced testing: these ads were winners of their respective rounds of testing, so we pitted them all against each other. The winning ad (with the woman in the skinny jeans) cost 72% less per action than the most costly ad in the set.
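A figure like "72% less per action" is the gap between two costs divided by the higher one. The article doesn't give the underlying costs, so this minimal Python sketch uses hypothetical dollar amounts; the function name `percent_less` is also our own.

```python
def percent_less(cheaper_cpa: float, costlier_cpa: float) -> float:
    """How much lower cheaper_cpa is, as a percentage of costlier_cpa."""
    if costlier_cpa <= 0:
        raise ValueError("costlier_cpa must be positive")
    return (costlier_cpa - cheaper_cpa) / costlier_cpa * 100


# Hypothetical costs per action, for illustration only.
winner_cpa = 0.30
costliest_cpa = 1.20
print(f"The winner costs {percent_less(winner_cpa, costliest_cpa):.0f}% less per action")
```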

Keep coming up with new variables and testing new iterations of your ad until your campaign has hit its goals. By continually working to find the lowest cost per action, you'll ensure the most effective spend of your advertising dollars.

Yes, it's more work than just making one ad and letting it run. But it's also a lot more fun! You're a marketer. Testing is what you do.

After all, testing isn't a means to an end. It's a way of life.